Key Considerations to Sustain Cyber Resilience in the Era of AI

Artificial Intelligence has ingrained itself into almost every facet of our lives, profoundly affecting the way we live and work. Despite its many benefits, the security implications of AI tools are often overlooked, which puts company data at risk. As these tools become more prevalent and sophisticated, it’s crucial for cybersecurity leaders to recognize and address these concerns to prevent sensitive data leaks and to ensure cyber resilience.

Cybersecurity Concerns in AI Tools

Integrating AI tools into various aspects of business and consumer life has been revolutionary, but it comes with its own set of challenges. One of the key issues is weak security controls within the organizations that build these tools. This applies especially to AI startups, which often lack robust controls due to resource constraints. Inadequate protection mechanisms open the door to cyber-attacks that can lead to the theft and misuse of user data.

AI startups, in their pursuit of cutting-edge technologies, sometimes overlook the importance of rigorous security measures such as access management and vulnerability management. This, coupled with their focus on growth and market capture, can inadvertently create loopholes for cybercriminals. The result could be devastating, ranging from the theft of sensitive customer data, such as financial details or personally identifiable information (PII), to significant reputational damage and financial loss for the company.

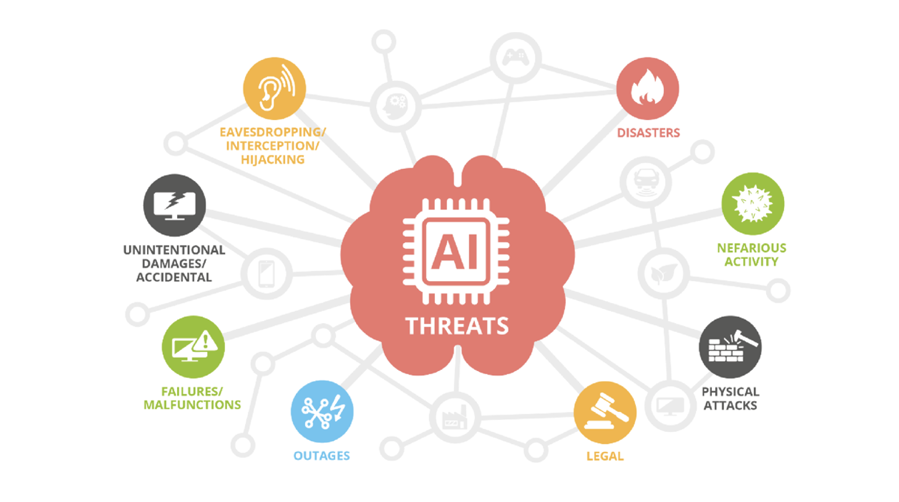

Source: ENISA. AI Cybersecurity Challenges. Threat Landscape for Artificial Intelligence

The Risk of Corporate Information Exposure

While AI tools streamline processes and enhance productivity, they also introduce additional risks to the organization. A company can develop an AI tool as a proprietary system or adopt one from a third party. In either case, you should be aware of several risks that come with using AI tools on company or customer data.

Data Privacy and Confidentiality Risks

With AI tools in the business environment, organizations are continuously processing vast amounts of data, some of which is highly sensitive. AI tools need access to this data to function effectively. However, if that access is not managed correctly, it can lead to serious data privacy and confidentiality risks. A breach could expose customers’ personal data or proprietary business information. Hence, AI tool usage should be backed by stringent access controls, secure data storage, and routine audits to ensure compliance and detect anomalies swiftly.

ChatGPT has an "absence of any legal basis that justifies the massive collection and storage of personal data" to "train" the chatbot, Garante said.

The agency, also known as Garante, accused Microsoft Corp-backed ChatGPT of failing to check the age of its users who are supposed to be 13 and up.

Source: https://www.cbc.ca/news/world/italy-openai-chatgpt-ban-1.6797963

Accidental Data Leakage

AI tools, especially when used for data analysis, can inadvertently expose confidential data. Training AI tools requires a massive amount of data. During processing, that data might be stored temporarily in unsecured locations or transmitted through vulnerable channels, leading to accidental data leakage. Misconfigured IT systems or environment settings, or a lack of adequate safeguards, could also lead to sensitive data being included in publicly accessible reports or dashboards.

Unintended Decisions

AI tools make decisions based on complex algorithms and data inputs. While this can streamline processes, remove mundane tasks, and optimize operational costs, it also brings a risk of unintended outcomes. If an AI tool misinterprets data or encounters a situation not covered in its training data, it might yield inaccurate or inappropriate results. These errors can lead to strategic missteps, operational or financial issues, or flawed customer interactions. Therefore, it’s vital that the results generated by AI tools are routinely reviewed by humans and supplemented with robust checks to catch and correct anomalies.

Security Vulnerabilities

In the end, AI tools are software like any other. As they become popular, they may become a favored target for cybercriminals. Cybercriminals can exploit vulnerabilities in the tool or its IT environment to gain unauthorized access. If an AI tool is compromised, attackers can use it to execute cyberattacks, manipulate data, or disrupt operations. As with other software and IT tools, protecting AI tools from cyber threats requires a multi-layered approach. Security professionals should consider regular software updates and penetration tests, robust access controls, and incident response plans.

Misuse of Data

Finally, there’s a risk of misuse of data by the AI tools themselves. They could be intentionally or accidentally programmed to use data in ways that violate privacy regulations or ethical guidelines. For instance, they may use personal data to make predictions or decisions that are discriminatory or invasive, leading to legal and ethical consequences for the organization.

Strategies to Prevent Sensitive Information Leaks

Whether you are developing or integrating AI tools to process corporate data, the risks of exposing that data should be appropriately managed. For every challenge described above, there are steps organizations can take to reduce both the likelihood of an incident and its impact. By strategically implementing these controls, leaders can confidently employ the power of AI while protecting their organization from potential pitfalls.

Below, we present a set of mitigation controls for each risk identified, providing a roadmap for C-level executives to navigate this complex landscape.

Mitigation Controls for Key Risks Related to Corporate Data Exposure to AI Tools

Robust AI Security Measures – Develop and implement security controls to protect the organization’s sensitive data while using AI tools. Ensure staff are aware of the requirements and limitations of using AI tools.

Data Handling Process – Establish clear data handling and usage policies and processes for AI systems. These should include regular audits of both the environment and the algorithms to detect any misconfiguration, non-compliance, or data breaches.

Secure Data Processing Environment – Whether data for AI tools is stored temporarily or permanently, ensure that the environment holding it has robust security controls. Organizations should implement controls such as access management, continuous monitoring and alerting, and system patching.

Data Leak Prevention (DLP) Tools – Use a DLP tool to monitor and control data in use, in motion, and at rest. Develop robust DLP rules and policies in alignment with the organization’s controls.

Advanced Anonymization Techniques – Use advanced anonymization techniques, such as differential privacy, to add a further layer of protection to personal data.

Ethical AI Framework – Establish an ethical AI framework, including principles for fairness, transparency, and accountability, to guide the usage of AI tools.

Incident Response – Adopt a proactive approach to cybersecurity to anticipate potential attacks. Extend the incident response plan to cover AI-related incidents and develop response procedures to manage security incidents effectively.

Regular Audits and Compliance Checks – Develop audit and compliance plans to conduct regular audits of AI systems. Ensure they are not using data inappropriately and meet all applicable legal and regulatory requirements.
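To make the differential privacy control mentioned above more concrete for technical teams, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. It is an illustration under stated assumptions, not a production implementation: the function names `laplace_noise` and `private_count`, the sample records, and the chosen `epsilon` value are all hypothetical, and a real deployment would normally rely on a vetted differential privacy library and a managed privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with rate
    1/scale follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the records that match `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the true count by at most 1), so Laplace noise with scale
    1/epsilon is added. Smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical customer records: (customer_id, has_overdue_invoice)
    customers = [(i, i % 4 == 0) for i in range(1000)]
    noisy = private_count(customers, lambda c: c[1], epsilon=0.5)
    print(f"Noisy count of customers with overdue invoices: {noisy:.1f}")
```

The design point is that an analyst or AI tool only ever sees the noisy aggregate, so the presence or absence of any single individual in the data has a limited effect on the result. How small `epsilon` should be is a policy decision that belongs in the data handling process described above.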

The AI landscape is growing rapidly, and proactively managing the associated risks is a business imperative. Leaders must prioritize the deployment of robust safeguards to protect the confidentiality, integrity, and availability of data. Implementing the mitigation controls discussed here will provide a blueprint for managing the risks and capitalizing on the transformative potential of AI.

We recommend that executives consider these controls as part of their broader business strategy and risk management framework. To stay ahead, continuous evaluation of AI systems and their associated risks should become a standard part of business and security operations.

If you would like to learn more about integrating AI risks into corporate risk management, feel free to contact Muza Sakhaev, our Risk and Advisory Lead Consultant.